Smarter, Safer Driving with AI

At Jeenish AI Solutions, we play a critical role in the advancement of autonomous driving systems by providing meticulously labeled datasets tailored to the unique challenges of real-world navigation. Our services fuel the perception and decision-making layers of self-driving AI, enabling vehicles to interpret and respond to complex environments with human-level accuracy.

2D Bounding Box Annotation

We annotate vehicles, pedestrians, traffic lights, bicycles, road signs, and other critical objects using tight bounding boxes across still images or video frames. This data is essential for training object detection models.

Example: Annotating cars and pedestrians on urban roads from dashcam footage for object detection.

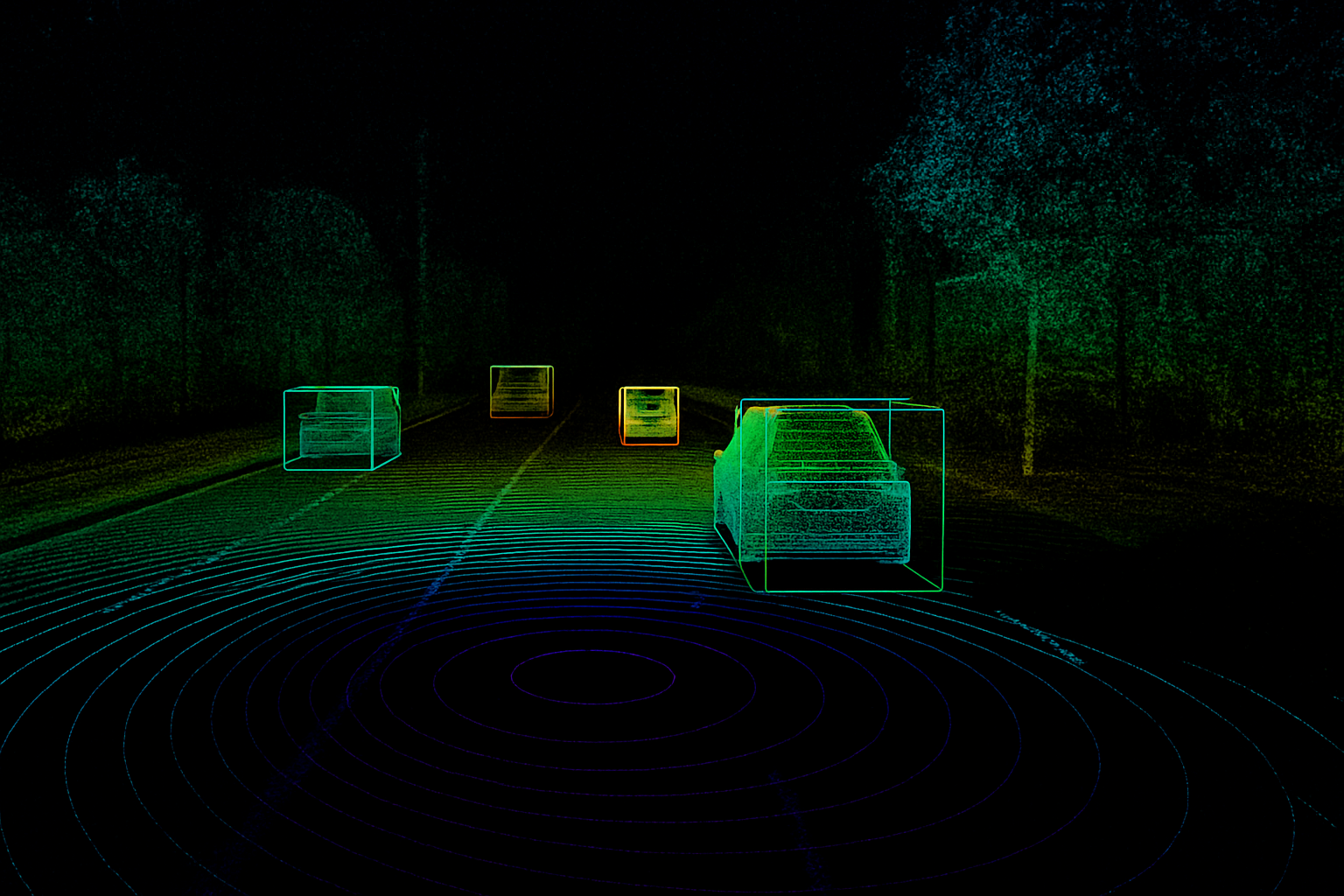

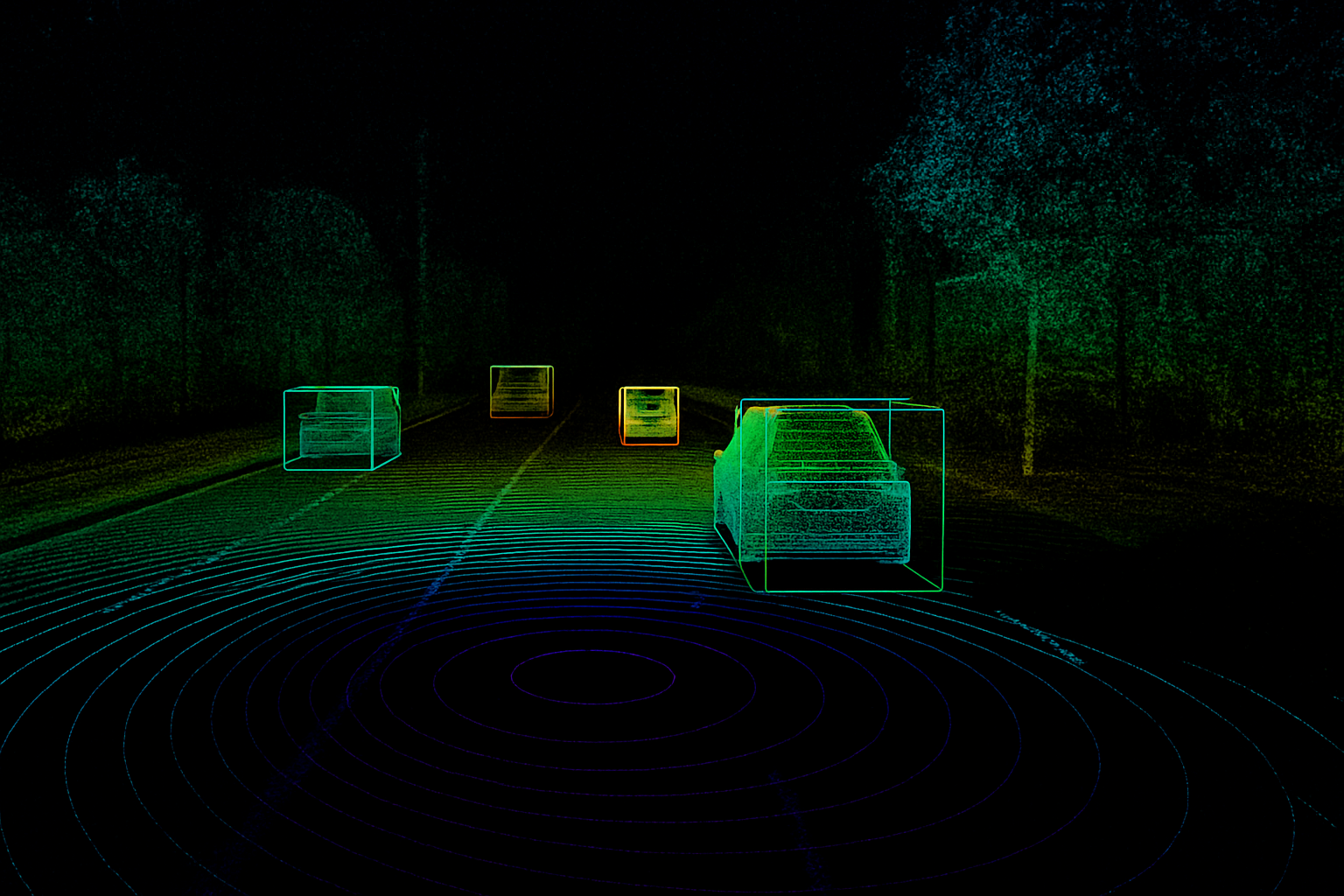
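As a minimal sketch of how a 2D box annotation might be stored and checked during review, the snippet below compares an annotator's box with a reviewer's box using intersection over union (IoU). The `Box2D` schema, its field names, and the sample coordinates are assumptions made for illustration, not a description of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box2D:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Degenerate boxes (max <= min) are treated as having zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box2D, b: Box2D) -> float:
    """Intersection over union of two boxes, in the range [0, 1]."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap
    inter = inter_w * inter_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


# Illustrative values only: the same car as drawn by two people.
annotator = Box2D("car", 100, 200, 300, 400)
reviewer = Box2D("car", 110, 210, 300, 400)
print(f"IoU between annotator and reviewer: {iou(annotator, reviewer):.4f}")
```

A review step could flag boxes whose IoU against a reference falls below a chosen threshold; the right threshold depends on the project.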

3D Cuboid Annotation (LiDAR & Camera Fusion)

For depth-aware perception, we place 3D cuboids over objects within LiDAR point cloud data. These annotations give autonomous systems a spatial understanding of object size, orientation, and trajectory.

Example: Bounding buses and motorbikes in LiDAR scans to support real-time tracking in fog or low-light conditions.
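To illustrate how a cuboid encodes size and orientation, here is a minimal sketch that expands a cuboid into its eight corner points. The `Cuboid` fields (center, dimensions in metres, yaw about the vertical axis) are a common convention but are assumed here; real LiDAR datasets differ in axis order and angle conventions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cuboid:
    """3D box in the sensor frame (hypothetical schema, metres and radians)."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float  # extent along the object's heading
    width: float
    height: float
    yaw: float     # rotation about the vertical (z) axis


def corners(c: Cuboid) -> list[tuple[float, float, float]]:
    """Return the 8 corner points of the cuboid in the sensor frame."""
    cos_y, sin_y = math.cos(c.yaw), math.sin(c.yaw)
    points = []
    for sx in (-0.5, 0.5):
        for sy in (-0.5, 0.5):
            for sz in (-0.5, 0.5):
                # Offset in the object's own frame...
                lx, ly, lz = sx * c.length, sy * c.width, sz * c.height
                # ...rotated by yaw in the ground plane, then translated.
                points.append((
                    c.cx + lx * cos_y - ly * sin_y,
                    c.cy + lx * sin_y + ly * cos_y,
                    c.cz + lz,
                ))
    return points


# Illustrative values only: a bus turned 90 degrees from the x axis.
bus = Cuboid("bus", 10.0, 2.0, 1.5, 12.0, 2.5, 3.0, math.pi / 2)
for corner in corners(bus):
    print(tuple(round(v, 2) for v in corner))
```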

Semantic Segmentation

We provide pixel-level annotation to segment roads, sidewalks, crosswalks, lane markings, and barriers. This is crucial for path planning, lane keeping, and obstacle avoidance.

Example: Segmenting roads vs. off-road terrain in snowy environments to help vehicles choose safe paths.
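A segmentation label can be thought of as a grid with one class ID per pixel. The sketch below computes each class's share of an image, a simple check that might be used to spot empty or lopsided masks. The class IDs, names, and the tiny mask are hypothetical.

```python
from collections import Counter

# Hypothetical class IDs; a real project defines its own label map.
CLASS_NAMES = {0: "road", 1: "sidewalk", 2: "lane_marking"}


def class_fractions(mask: list[list[int]]) -> dict[int, float]:
    """Return the fraction of pixels assigned to each class ID."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cls: n / total for cls, n in counts.items()}


# Illustrative 3x4 mask: mostly road, a sidewalk strip, a short lane marking.
mask = [
    [0, 0, 0, 1],
    [0, 2, 0, 1],
    [0, 2, 0, 1],
]
for cls, share in sorted(class_fractions(mask).items()):
    print(f"{CLASS_NAMES.get(cls, 'unknown')}: {share:.1%}")
```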

Polyline Annotation (Lane Detection)

Our annotators draw polylines along lane markings, curbs, and road edges to train lane detection and trajectory prediction systems.

Example: Drawing polylines along diverging expressway lanes to enable accurate autonomous lane changes.
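A polyline annotation is an ordered list of points. As a minimal sketch, the snippet below stores one lane boundary and measures its length in pixels; the record layout and the coordinates are assumptions for illustration.

```python
import math


def polyline_length(points: list[tuple[float, float]]) -> float:
    """Sum of the straight-line distances between consecutive points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical annotation record for one lane boundary (pixel coordinates).
lane = {
    "label": "lane_boundary",
    "points": [(120.0, 700.0), (180.0, 620.0), (260.0, 540.0), (360.0, 470.0)],
}
print(f"{lane['label']}: {polyline_length(lane['points']):.1f} px")
```

Length is one simple sanity check: a polyline far shorter than its neighbours may indicate a lane that was only partly traced.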

Video Frame Annotation & Object Tracking

We annotate object positions frame by frame to track their movement across time. This helps in understanding object velocity, direction, and interactions with other objects.

Example: Tracking a pedestrian crossing the street while a cyclist overtakes from behind to support collision avoidance.
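To show how frame-by-frame positions relate to velocity and direction, here is a minimal sketch that turns a tracked object's box centers into image-plane velocities. The track format (frame index plus center point) and the 30 fps frame rate are assumptions; converting pixels to real-world speed would additionally need camera calibration.

```python
def pixel_velocities(
    track: list[tuple[int, tuple[float, float]]], fps: float
) -> list[tuple[float, float]]:
    """Velocity (vx, vy) in pixels per second between consecutive observations.

    `track` is a list of (frame_index, (center_x, center_y)) sorted by frame.
    Gaps between frame indices are allowed and are accounted for.
    """
    velocities = []
    for (f0, (x0, y0)), (f1, (x1, y1)) in zip(track, track[1:]):
        frames = f1 - f0
        if frames <= 0:
            raise ValueError("track must be sorted by strictly increasing frame index")
        velocities.append(((x1 - x0) * fps / frames, (y1 - y0) * fps / frames))
    return velocities


# Illustrative track for one pedestrian; frame 2 is missing (e.g. occluded).
pedestrian = [
    (0, (100.0, 200.0)),
    (1, (103.0, 204.0)),
    (3, (109.0, 212.0)),
]
for vx, vy in pixel_velocities(pedestrian, fps=30.0):
    print(f"vx={vx:.1f} px/s, vy={vy:.1f} px/s")
```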

Activity Recognition

We label activities such as 'person crossing,' 'vehicle braking,' or 'cyclist turning,' enabling autonomous systems to interpret context and predict behavior.

Example: Recognizing a person about to jaywalk or a vehicle parked hazardously on the roadside.

Dataset Design, Cleanup & QA

We help AV teams design task-specific datasets (for example, night driving, construction zones, or high-traffic intersections) and provide ongoing validation and QA to ensure precision at scale.

Example: Designing a dataset of nighttime delivery truck scenarios with rare corner cases like parked emergency vehicles.

Multilingual Transcription (for Voice Interfaces)

For AVs with voice control systems, we provide multilingual voice-to-text transcription and intent tagging for natural language commands.

Example: Transcribing in-car voice commands like “navigate to nearest charging station” across languages like Spanish, Hindi, and German.

Why It Matters

Autonomous vehicles rely on split-second data interpretation. Our expert-verified annotations reduce edge-case errors, improve generalization, and provide the diverse, high-quality input required to train robust, production-grade AV systems. They are backed by scalable pipelines, multilingual capability, and human-in-the-loop precision.